Controlled Dataset Integrity Outline for 6512547042, 7862790656, 965648604, 648621310, 8001231003, 1122330027

Decisions based on controlled datasets such as 6512547042, 7862790656, 965648604, 648621310, 8001231003, and 1122330027 are only as reliable as the data they contain. Data accuracy can be threatened by human error, such as mistyped or duplicated entries, and by system vulnerabilities. Mitigating these risks calls for a structured approach built on validation processes and data governance, because identifying and addressing weaknesses early improves the quality of the insights these datasets provide. The sections below outline strategies for doing so.

Importance of Dataset Integrity

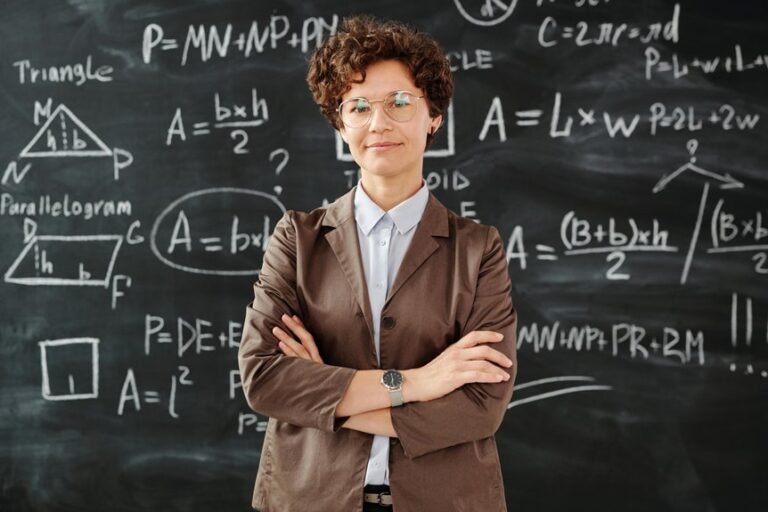

In data management, dataset integrity is paramount: even minor discrepancies can compromise the validity of research outcomes.

Data trustworthiness depends on verification processes that confirm each dataset remains accurate and unaltered over time. Researchers should prioritize these processes to uphold the credibility of their findings, so that open inquiry rests on sound, trustworthy data.
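
As one illustration of a verification process, the Python sketch below records a SHA-256 digest when a dataset is created and recomputes it later; any change to the bytes produces a different digest. The sample contents and the function names `sha256_digest` and `verify_integrity` are hypothetical. Note that a checksum only detects changes after the digest was recorded; it does not show that the original data was correct.

```python
import hashlib


def sha256_digest(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of a dataset's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_integrity(data: bytes, expected_digest: str) -> bool:
    """Return True if the data still matches the digest recorded earlier."""
    return sha256_digest(data) == expected_digest


# Hypothetical dataset contents; in practice these bytes would be read from storage.
original = b"id,value\n1,10\n2,20\n"
recorded = sha256_digest(original)  # stored alongside the dataset at creation time

# Simulate an unintended edit to one value.
tampered = original.replace(b"10", b"11")

print(verify_integrity(original, recorded))  # True
print(verify_integrity(tampered, recorded))  # False
```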

Best Practices for Maintaining Data Accuracy

Although maintaining data accuracy may seem straightforward, it requires a systematic approach that incorporates multiple best practices.

Effective methods include rigorous data validation, regular audits, and comprehensive user training. Automated checks and version control further protect integrity, while access controls ensure that only authorized users can view or modify data.
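
Automated checks can take many forms. A minimal sketch, assuming records are Python dicts, is a list of named rules applied to every record, with each failure reported by record index. The `Rule` class, the two rules, and the field names `id` and `value` are hypothetical placeholders for whatever a real schema requires.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """A named check applied to a single record (a dict of field -> value)."""
    name: str
    check: Callable[[dict], bool]


# Hypothetical rules for illustration; real rules depend on the dataset's schema.
RULES = [
    Rule("id is a non-empty digit string",
         lambda r: str(r.get("id", "")).isdigit()),
    Rule("value is a non-negative number",
         lambda r: isinstance(r.get("value"), (int, float)) and r["value"] >= 0),
]


def validate(records: list[dict], rules: list[Rule]) -> list[tuple[int, str]]:
    """Return (record index, rule name) for every rule a record fails."""
    failures = []
    for i, record in enumerate(records):
        for rule in rules:
            if not rule.check(record):
                failures.append((i, rule.name))
    return failures


records = [
    {"id": "1001", "value": 42},
    {"id": "", "value": 7},
    {"id": "1003", "value": -5},
]
print(validate(records, RULES))
# [(1, 'id is a non-empty digit string'), (2, 'value is a non-negative number')]
```

Keeping each rule named makes the failure report readable in an audit, and new rules can be added without touching `validate`.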

Error tracking and data cleansing techniques help identify discrepancies, such as duplicate or malformed records, and correct them before they affect the overall accuracy of a dataset.
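
A simple form of data cleansing with built-in error tracking might look like the sketch below. It assumes flat records whose values are hashable, trims stray whitespace from string fields, drops exact duplicates, and returns a list of the issues it found so they can be reviewed. The function name `cleanse` and the sample fields are hypothetical.

```python
def cleanse(records: list[dict]) -> tuple[list[dict], list[str]]:
    """Trim whitespace in string fields and drop exact duplicates.

    Returns the cleaned records plus a list of human-readable issues, which
    acts as a simple error-tracking log. Assumes flat records whose values
    are hashable (str, int, float, ...).
    """
    seen = set()
    cleaned = []
    issues = []
    for i, record in enumerate(records):
        # Normalization step: strip leading/trailing whitespace from strings.
        normalized = {k: v.strip() if isinstance(v, str) else v
                      for k, v in record.items()}
        if normalized != record:
            issues.append(f"record {i}: trimmed whitespace")
        # Deduplication step: compare records by their sorted field/value pairs.
        key = tuple(sorted(normalized.items()))
        if key in seen:
            issues.append(f"record {i}: duplicate removed")
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned, issues


# Hypothetical sample records.
raw = [
    {"id": "1001", "name": "Alice"},
    {"id": "1002", "name": " Bob "},
    {"id": "1001", "name": "Alice"},
]
cleaned, issues = cleanse(raw)
print(cleaned)  # [{'id': '1001', 'name': 'Alice'}, {'id': '1002', 'name': 'Bob'}]
print(issues)   # ['record 1: trimmed whitespace', 'record 2: duplicate removed']
```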

Risks of Compromised Datasets

Compromised datasets pose significant risks that can undermine the integrity of data-driven decision-making processes.

Data breaches exploit security vulnerabilities and erode stakeholder trust. Organizations may also face compliance violations and the regulatory penalties that follow.

Compromised datasets can also cause financial losses and operational disruptions, which threaten an organization's long-term viability in a competitive landscape that depends on accurate, secure data management.

Framework for Robust Data Management

Developing a comprehensive framework for robust data management is essential for organizations aiming to safeguard their datasets and enhance decision-making accuracy.

This framework should encompass stringent data governance practices, adherence to compliance standards, and thorough risk assessment protocols throughout the data lifecycle.

Audit trails and access controls add accountability: they record who accessed or changed data and when, which supports both transparency and security in data management.
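
Access controls and audit trails can be combined so that every access attempt is checked against a permission table and logged, whether or not it succeeds. The sketch below makes several assumptions: a hypothetical role-to-permission mapping, an in-memory log, and a SHA-256 hash chain (a common tamper-evidence technique, not something the framework above prescribes) that links each entry to the previous one so that altering a past entry becomes detectable. The `AuditTrail` class, `access` function, and role names are illustrative only.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; a real system would load this from policy.
PERMISSIONS = {
    "analyst": {"read"},
    "steward": {"read", "write"},
}

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _hash_entry(body: dict) -> str:
    """Hash an entry's fields deterministically (sorted keys)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only in-memory log; each entry stores the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def record(self, user, role, action, dataset, allowed):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user, "role": role, "action": action,
            "dataset": dataset, "allowed": allowed, "prev_hash": prev_hash,
        }
        entry["hash"] = _hash_entry(entry)  # hash computed before the key is added
        self.entries.append(entry)

    def verify(self) -> bool:
        """Recompute every hash; return False if any entry or link was altered."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _hash_entry(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


def access(trail, user, role, action, dataset) -> bool:
    """Check the role's permissions and log the attempt either way."""
    allowed = action in PERMISSIONS.get(role, set())
    trail.record(user, role, action, dataset, allowed)
    return allowed


trail = AuditTrail()
print(access(trail, "alice", "analyst", "read", "6512547042"))   # True
print(access(trail, "alice", "analyst", "write", "6512547042"))  # False
print(trail.verify())                # True
trail.entries[1]["allowed"] = True   # simulate tampering with a past entry
print(trail.verify())                # False
```

The chain only shows that entries were not edited in place; someone able to rewrite the whole log could recompute every hash, so the latest hash would need to be stored somewhere the log's writers cannot modify.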

Conclusion

In conclusion, ensuring the integrity of datasets such as 6512547042, 7862790656, and the others listed above protects decision-making against data inaccuracies and breaches. Rigorous validation processes, systematic audits, and stringent governance let organizations guard against these risks and improve the quality of their decisions. A sustained commitment to data accuracy and reliability builds stakeholder trust in these datasets and leads to better-informed, more effective outcomes.